Using a Platform as a Service (PaaS) is a great way to quickly build, innovate on, and deploy a new product or service. By relying on a PaaS vendor’s servers, load balancing, application scaling, and third-party integrations, your engineering team can focus on building customer-facing features that add value to your business.

There comes a point, however, where many organizations outgrow their one-size-fits-all PaaS. Some common reasons include:

- Performance

- Cost Efficiency

- Control

- Reliability

- Platform Limitations

We recently migrated one such client from Heroku to Kubernetes on AWS.

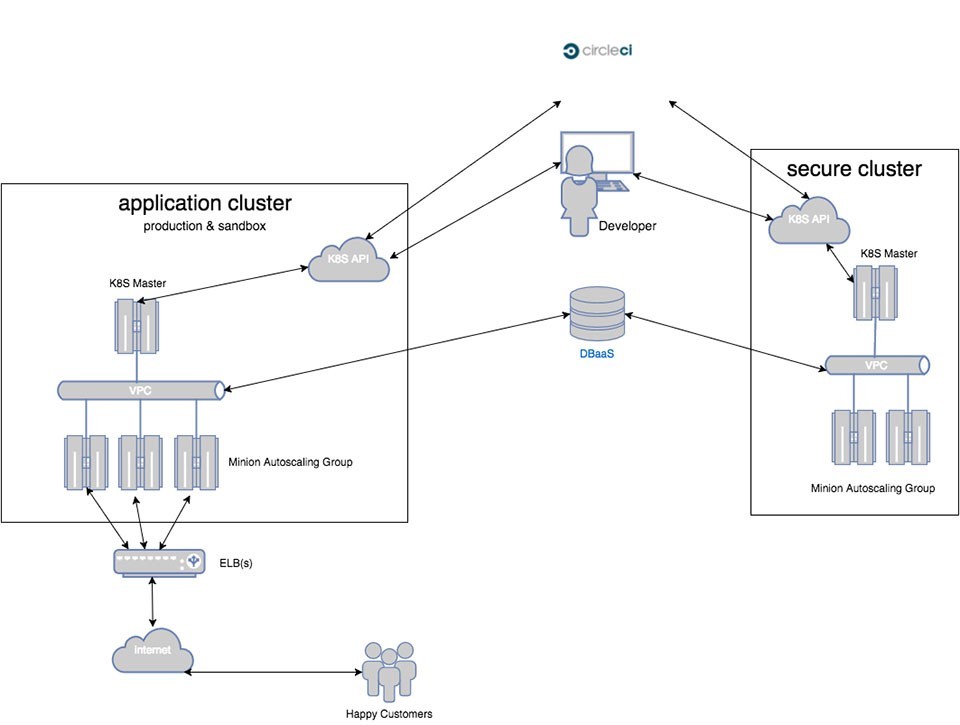

This client had seen phenomenal growth and was struggling with some of the constraints and performance issues of a true PaaS. In addition, they needed to run sensitive workloads completely isolated from their normal application workload, and the PaaS provider couldn’t accommodate this need.

Their previous architecture used Heroku for client-facing websites and APIs, plus a separate instance at a leading managed hosting provider to handle their secure transactions. Managing two infrastructure stacks, each with its own logging, monitoring, and deployment pipeline, was slowing their development process.

Finally, they were in the process of breaking up several monolithic applications into a collection of microservices. These microservices would enable more code reuse and reduce application complexity. Our client knew that microservices shift complexity from the applications to the infrastructure, and wanted an implementation that would provide critical features like service discovery, traffic routing, and secret management.

Goals

Beyond relying on our fully managed DevOps-as-a-Service, our client’s technical requirements called for a modern cloud architecture including:

- Consolidated infrastructure using an elastic cloud platform like AWS

- Orchestration system supporting microservice architectures

- Two independent clusters: Production/Staging and Secure

- Centralized application logging, metrics, and alerting

- Application CI/CD pipeline

- Local development environment closely matching production

After thoroughly evaluating the existing infrastructure, the legacy applications, and their CTO’s vision for their next-generation applications, our team proposed a solution based on the open-source Kubernetes container orchestration engine, deployed to Amazon Web Services.

Modern architectures with Kubernetes

If you haven’t heard of Kubernetes before, stop reading and go visit the project website.

Then read “Why Kubernetes could be crowned king of container management” and “Google Kubernetes Is an Open-Source Software Hit.”

Back? The buzzwords are not a lie.

To summarize:

"Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications."

From an application developer’s point of view, Kubernetes provides a robust mechanism to easily deploy and scale applications (as you might with a PaaS), without the constraints and vendor lock-in of an actual PaaS. This was exactly what our client was looking for: a perfect functional fit.

Out of the box, Kubernetes provides a number of essential features for modern cloud architectures:

- Deployment methodologies for AWS, GCP, and Azure

- Container scheduling and orchestration

- Service discovery and load balancing

- Secret and configuration management

- Automated rolling deploys (and rollbacks!)

- Horizontal application autoscaling

- Application log collection

However, there are several operational capabilities that are not batteries-included with Kubernetes:

- Consistent AWS Deployment

- Build environment (CI/CD)

- Docker Registry

- Monitoring & Alerting

- External Logging

Production-ready Deployment

We have plenty of experience working with tools and SaaS platforms that handle the capabilities not included with Kubernetes. We based our recommendations for this client on what they were already using elsewhere and on which services provided the best value in terms of features and capabilities.

The completed deployment included:

- Amazon Web Services

- Application Logging using logspout & Papertrail

- Application and container monitoring and alerting using Datadog and PagerDuty

  - Datadog has an awesome tool to generate the DaemonSet for your Kubernetes cluster

  - For this particular client, we handle Level-2 pager duty

- Continuous Integration and Continuous Deployment using CircleCI

  - Build/test of PRs, master deploys to ‘staging’, GitHub releases ship to production

- Collection of tools to manage cluster lifecycle

  - Cluster creation, deletion, state backup & import

- Quay.io’s excellent Docker Registry

  - Integrated vulnerability scanning with Clair

  - Customizable RBAC access, including ‘robot’ accounts for Kubernetes & CircleCI

- Local development workflow using minikube
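The CircleCI promotion rule in the list (PRs are built and tested, `master` deploys to staging, GitHub releases ship to production) is essentially a small decision function. The Go sketch below is hypothetical: the `Event` type and `targetEnvironment` function are illustrative names, not part of CircleCI’s API. In an actual CircleCI configuration the same rule would typically be written as branch and tag filters on workflow jobs; the sketch only makes the precedence explicit.

```go
package main

import "fmt"

// Event is a hypothetical, simplified view of the Git change that
// triggered a CI build.
type Event struct {
	Branch string // branch name; empty for tag (release) builds
	Tag    string // release tag; empty for branch builds
	IsPR   bool   // true when building a pull request
}

// targetEnvironment applies the pipeline rules from the list:
// pull requests are only built and tested, commits on master deploy
// to staging, and release tags ship to production.
func targetEnvironment(e Event) string {
	switch {
	case e.IsPR:
		return "none" // build and test only, never deploy
	case e.Tag != "":
		return "production"
	case e.Branch == "master":
		return "staging"
	default:
		return "none" // other branches: build and test only
	}
}

func main() {
	events := []Event{
		{Branch: "feature/login", IsPR: true},
		{Branch: "master"},
		{Tag: "v1.4.0"},
		{Branch: "feature/login"},
	}
	for _, e := range events {
		fmt.Printf("%+v -> %s\n", e, targetEnvironment(e))
	}
}
```

Checking the pull-request case first ensures that no PR build can deploy anywhere, regardless of which branch it targets.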

The Clusters, In a Picture

Our client has been running their new infrastructure in staging for a few months now and has begun the production transition. Working with their engineering team, we have automated the process for deploying a new microservice to the Kubernetes infrastructure. What used to take a developer anywhere from a few hours to more than a day of hacking on the old infrastructure now takes less than 20 minutes, and the platform provides all the features needed to do it right.

Future Work

As we scale up their production workload, we are identifying several new requirements that we are folding into the Fairwinds DevOps-as-a-Service product:

- ChatOps to manage state of the Kubernetes cluster and application deployment lifecycle

- Improved Kubernetes deployment system including HA etcd & master

- Enhanced secret management system

- Preconfigured Kibana for use with the Elasticsearch-Logstash system built into Kubernetes